I’m down with a terrible cold this week, so I figured I’d just do a short update on the Buzzfeed article everyone’s sending me about the latest on Brian Wansink. The article does a pretty good job of recapping the situation up until now, so feel free to dive on in to the drama.

The reason this update is particularly juicy is that somehow Buzzfeed got hold of a whole bunch of emails from within the lab, and it turns out a lot of the chicanery was a feature, not a bug. The whole thing is so bad that even the Joy of Cooking went after Wansink today on Twitter, and DAMN is that a cookbook that can hold a grudge. I’m posting the whole thread because it’s not every day you see a cookbook publisher get into it about research methodology:

Now, normally I would think this was a pretty innocuous research methods dispute, but given Wansink’s current situation, it’s hard not to wonder if the cookbook has a point. Knowing what we now know about Wansink, the idea that he was chasing headlines seems like a pretty reasonable charge.

However, in the (rightful) rush to condemn Wansink, I do want to make sure we don’t get too crazy here. For example, the Joy of Cooking complains that Wansink only picked out 18 recipes to look at out of 275. In and of itself, that is NOT a problem. Sampling from a larger group is how almost all research is done. The problem only arises if those samples aren’t at least somewhat random, or if they’re otherwise cherry-picked. If he really did use recipes with no serving sizes to prove that “serving sizes have increased,” that’s pretty terrible.
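For anyone curious what “at least somewhat random” could look like in practice, here’s a minimal Python sketch of simple random sampling. It assumes the 275 recipes are just numbered 0 to 274, and the function name and seed value are arbitrary placeholders; this is an illustration of the general technique, not a reconstruction of how Wansink actually chose his 18.

```python
import random


def sample_recipes(n_total: int, n_sample: int, seed: int) -> list[int]:
    """Draw a simple random sample of recipe indices, without replacement.

    Hypothetical helper: recipes are assumed to be numbered 0..n_total-1.
    """
    rng = random.Random(seed)  # fixed seed so anyone can reproduce the same draw
    return sorted(rng.sample(range(n_total), n_sample))


# Pick 18 of the 275 recipes; the seed is an arbitrary placeholder.
picked = sample_recipes(275, 18, seed=2018)
print(picked)
```

Because the seed is fixed and written down, anyone can rerun the draw and get the same 18 recipes, which makes it easy to check that nobody hand-picked the convenient ones.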

Andrew Gelman makes a similar point about one of the claims in the Buzzfeed article. Lee (the author) stated that “Ideally, statisticians say, researchers should set out to prove a specific hypothesis before a study begins.” While Gelman praises Lee for following the story and says Wansink’s work is “…the most extraordinary collection of mishaps, of confusion, that has ever been gathered in the scientific literature – with the possible exception of when Richard Tol wrote alone,” he also gently cautions that we shouldn’t go too far. The problem, he says, is not that Wansink didn’t start out with a specific hypothesis or that he ran 400 comparisons; it’s that he didn’t include that part in the paper.
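To see why leaving those 400 comparisons out of the paper matters, here’s a rough Python simulation. It leans on the standard assumption that when there’s no real effect, a well-behaved test’s p-value is equally likely to land anywhere between 0 and 1; the function name and seed are made up for illustration.

```python
import random


def count_false_positives(n_comparisons: int, alpha: float, seed: int) -> int:
    """Simulate n comparisons where nothing is going on and count the 'hits'.

    Assumption: under the null hypothesis, p-values are uniform on [0, 1).
    """
    rng = random.Random(seed)
    p_values = [rng.random() for _ in range(n_comparisons)]
    # Every p-value under alpha here is a false positive, since no effect exists.
    return sum(p < alpha for p in p_values)


expected = 400 * 0.05  # about 20 "significant" results from pure noise
observed = count_false_positives(400, 0.05, seed=1)
print(f"expected about {expected:.0f}, got {observed}")
```

With 400 comparisons and a .05 cutoff, you’d expect around 20 “significant” results from noise alone, which is why readers need to know how many comparisons were run before they can judge the handful that got written up.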

I completely agree with this, and it’s a point everyone should remember.

For example, when I wrote my thesis paper, I did a lot of exploratory data analysis. I had 24 variables, and I compared all of them to obesity rates and/or food insecurity status. I didn’t have a specific hypothesis about which one would be significant; I just ran all the comparisons. When I put the paper together, though, I included every comparison in the Appendix, stated how many I had run, and then focused on discussing the ones whose p-values were particularly low. My cutoff was .05, but I used the Bonferroni correction to figure out which ones to talk about. That method is pretty simple: if you do 20 comparisons and want to keep your overall alpha at .05, you divide .05 by 20 to get .0025, and only results below that count as significant. I still got significant results, and I had the bonus of giving everyone all the information. If anyone ever wanted to replicate any part of what I did, or compare a different study to mine, they could do so.
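The Bonferroni arithmetic is simple enough to sketch in a few lines of Python. The function names and the p-values in the example are hypothetical, not numbers from my thesis; the one thing that matters is that every comparison you ran goes into the count, not just the interesting ones.

```python
def bonferroni_threshold(alpha: float, n_comparisons: int) -> float:
    """Per-comparison cutoff that keeps the overall (familywise) error rate at alpha."""
    return alpha / n_comparisons


def significant_after_bonferroni(p_values: dict[str, float], alpha: float = 0.05) -> list[str]:
    """Return the comparisons whose p-value falls below the Bonferroni cutoff.

    p_values must contain EVERY comparison that was run, or the cutoff is too lenient.
    """
    threshold = bonferroni_threshold(alpha, len(p_values))
    return sorted(name for name, p in p_values.items() if p < threshold)


# The worked example from the text: 20 comparisons at alpha = .05.
print(bonferroni_threshold(0.05, 20))  # 0.0025

# Hypothetical p-values for three comparisons: the cutoff becomes .05 / 3, about .0167.
print(significant_after_bonferroni({"var_a": 0.001, "var_b": 0.01, "var_c": 0.04}))
# ['var_a', 'var_b']
```

Note that var_c would have passed a plain .05 cutoff; it only drops out because the threshold accounts for all three comparisons.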

Gelman goes on to point out that in many cases there really isn’t one “right” way of doing stats, so the best we can do is be transparent. He sums up his stance like this: “Ideally, statisticians say, researchers should report all their comparisons of interest, as well as as much of their raw data as possible, rather than set out to prove a specific hypothesis before a study begins.”

This strikes me as a very good lesson. Working with uncertainty is hard and slow going, but we have to make do with what we have. Otherwise we’ll be throwing out every study that doesn’t live up to some sort of hyper-perfect ideal, which will make it very hard to do any science at all. Questioning is great, but believing nothing is not the answer. That’s a lesson we all could benefit from. Well, that and “don’t piss off a cookbook with a long memory.” That burn’s gonna leave a mark.

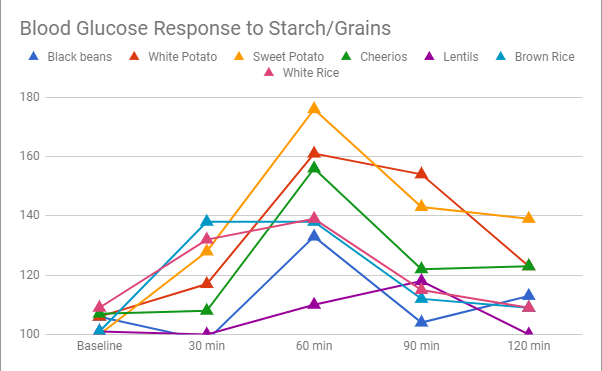
