No, there haven’t been 18 school shootings so far this year. Parkland is a tragedy, but no matter what your position, spreading stats like these doesn’t help.

I’ve followed Neuroskeptic long enough to know I should be skeptical of fMRI studies, and this paper shows why: some studies looking for changes in specific brain regions may be confounded by individual variation. In other words, what was identified as “change” may just have been individual differences.

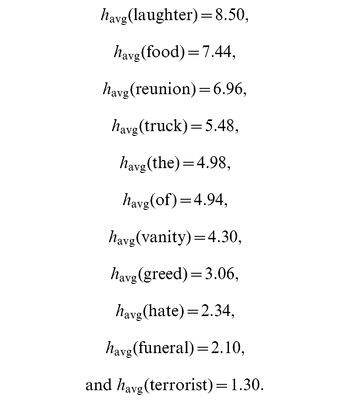

Speaking of questionable data, I’ve posted a few times about Brian Wansink and the ever-growing scrutiny of his work. This week his marquee paper was called into question: the bottomless bowl experiment. This experiment involved diners with “self-refilling” bowls of tomato soup, and the crux of the finding is that without visual cues people tend to underestimate how much they’ve eaten. The fraud accusations were surprising, given that:

- This finding seems really plausible

- This finding pretty much kicked off Wansink’s career in the public eye

If this finding were based on fake data, it seems almost certain that everything that ever came out of his lab is suspect. Up until now I think the general sense was that things might have gotten sloppy as the fame of his research grew, but a fake paper right at the start would indicate a different problem entirely.

Related: a great thread on Twitter about why someone should definitely try to replicate the soup study ASAP. Short version: the hypothesis is still plausible and your efforts will definitely get you attention.

Another follow-up to a recent post: AL.com dives into Alabama school districts to see whether school secessions (i.e. cases where schools split off from a county system into a city-controlled system) are racially motivated. While their research was prompted by a court ruling that one particular proposed split was racially motivated, they found that in general, schools didn’t change their racial or class makeup all that much when they split off from larger districts. What they did find was that cities that split off their schools ended up spending more per student than they had when they were part of a county system. This change isn’t immediate, but a few years out it was almost universally true. This suggests that taxpayers are more agreeable to higher tax rates when they have more control over where the money goes. Additionally, the new schools tend to wind up more highly rated than the districts they left, and the kids do better on standardized tests. Interesting data, and it’s nice to see a group look at the big picture.

![chart2](https://cdn.vox-cdn.com/uploads/chorus_asset/file/9999425/chart2.png)